Cloud Lake Storage - Files vs Tables

The cost and scale of cloud storage makes it possible to store large amounts of data in almost any format. We can build abstractions on top of this simple cheap highly available storage that lets us access it as if it were something more sophisticated.

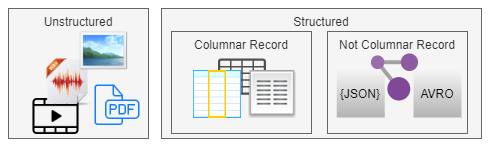

Let's divide the data into two coarse types, unstructured and structured.

Unstructured data, in this case, is any data where the content is un-typed, is not easily machine parse-able or does not store data with a tight schema. Sound, media, pictures and PDF documents are examples of unstructured data for this discussion.

Structured data has a deterministic content format and if often designed for machine consumption. Let us break structured into columnar-record and non-columnar record formats. Most columnar record formats are designed for many records per data object. Non-columnar formats may be single record per file or may not be easily flatten able into record format. We ill ignore them for the tabular discussion

Cloud storage is organized as a giant blob-space of objects where every object has its' own unique identifier. It is a global file system, except different.

Let's divide the data into two coarse types, unstructured and structured.

Unstructured data, in this case, is any data where the content is un-typed, is not easily machine parse-able or does not store data with a tight schema. Sound, media, pictures and PDF documents are examples of unstructured data for this discussion.

Structured data has a deterministic content format and if often designed for machine consumption. Let us break structured into columnar-record and non-columnar record formats. Most columnar record formats are designed for many records per data object. Non-columnar formats may be single record per file or may not be easily flatten able into record format. We ill ignore them for the tabular discussion

| Type | Structure | Multi-object | Parseable | Update-able |

|---|---|---|---|---|

| Unstructured | Irregular | n/a | No | No |

| Structured | Document or Graph | Maybe | Yes | No |

| Structured | Columnar Record | Yes | Yes | Yes |

Cloud storage is organized as a giant blob-space of objects where every object has its' own unique identifier. It is a global file system, except different.

Cloud Storage Paradigms

Cloud Data analytics operates on huge amounts of data most often arraigned in row and column format. Big Data compute treats those square entities as data tables that are joined and filtered en-masse as if the data store was a relational database. Many organizations formalize this Data Warehouse style treatment of lake data.

In general, cloud storage does not support in-place partial updates. Object portions cannot be replaced. Updates are done by replacing the entire cloud copy of the object. Big Data works around this by mapping virtual tables onto many files organized in a specific way. Each file contains some subset of the total records. Big data tooling like Spark or Presto understand this and can transparently merge large numbers of files into high performance virtual tables. They actually leverage this storage in order to execute highly parallel operations where each process executes against some subset of the objects that make up pseudo-table.

In general, cloud storage does not support in-place partial updates. Object portions cannot be replaced. Updates are done by replacing the entire cloud copy of the object. Big Data works around this by mapping virtual tables onto many files organized in a specific way. Each file contains some subset of the total records. Big data tooling like Spark or Presto understand this and can transparently merge large numbers of files into high performance virtual tables. They actually leverage this storage in order to execute highly parallel operations where each process executes against some subset of the objects that make up pseudo-table.

Big Data Cloud tables are broken up into partitions. Each partition can contain many objects (files) that in union make up all the data for that partition. This makes it possible to easily add data to slow changing tables by adding new files into the data's partition.

Same Data - Multiple Formats

Cloud storage is relatively cheap. Individual companies can store 10s or even 100s of Petabytes without worrying about running out of space. This is more data than the sum of all global storage 25 years ago.

Cheap storage means we can retain multiple copies of data in different formats optimized by consumption or regulatory needs. CSV files can be stored as objects in CSV format and as virtual partitioned tables in something table friendly formats like ORC or Parquet.

Wrap Up

Cloud based, storage, maps to objects, tables or other types based on the data mapping tier. This makes it possible to turn immutable objects into mutable (insert) tables. You can treat your data either one way or the other or both.

Video

Modification Log

Created 4/2020

Comments

Post a Comment