Improving Software Quality with Continuous Instrumentation

Better Software is No Accident

The most reliable way to build better software is to integrate quality into the process with as much automation as possible. Automated measurements and metrics provide continuous public visibility into the current state and trends. It is easiest to apply these techniques to new projects, but they can also be applied to existing processes in an incremental fashion:

Spending a Little More Up Front Saves Money

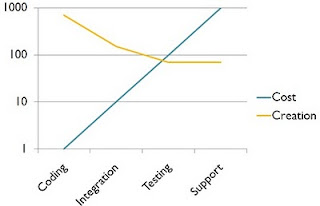

Everyone knows that defects cost more to fix when the later they are found. More people have to get involved as the code moves to upper environments and customer satisfaction or legal issues can appear if a defect makes it all the way to production.

Continuous integration and measurement adds cost at the beginning with a significant reduction in defects which actually shortens the total to-customer cycle time. Numbers from the original presentation deleted at company's request.

Continuous Improvement

Software development has a lot in common with other processes. Shorter cycles combine with honest measurement to provide opportunity for improvement and higher quality. Every cycle is an opportunity to do something better.

Automation Assisted Improvement

Quality processes involve many steps that must run over and over often for years. Team members interest in the process can fade over time as other "more pressing" problems must be handled. Automation is the best way to support a process over time.

This diagram shows the every day cycle of software development as viewed purely from the development point of view. Peer/Code reviews are a core part of the quality life cycle. Manual code inspection finds defects better than any other tool and acts as an excellent vehicle for staff training. I've worked on two large (1M LOC) projects where 100% peer review was required with a significant reduction in bad code and defects. The problem with peer reviews is that they vary in quality based on the reviewer and they are subject to management pressure when the team is behind.

Automated measurements work the same no matter what the schedule or desires of individuals. The preceding diagram shows a system where Automated static analysis teams and automated unit test analysis feeds into a report that is then used for developer coaching. Agile style continuous integration generates reports on a regular basis, often daily. This continuous visibility makes it easy for everyone to see the current state. People tend to pay more quality when metrics reports are generated and made visible automatically.

Obvious Benefits

This sample project made the following changes in the 15 months. The project already had continuous integration, via Hudson, and a code review process in place prior to introducing automated metrics (Java/Sonar)

Automated Auditing

A fair amount of work has already been done on code quality and how it can measured or audited. We went after four main areas because of the good tooling support.

Automation is the simple part. We implemented a fairly straightforward process that built on standard Agile continuous integration processes.

Sonar and other reporting systems can be augmented to tie back changes to their source. VCS integration makes it possible to track changes by

Here is an example report that shows code coverage changes for Java files based on changes in Sonar data.

Training and Expectations

Bringing the team and management up to speed is a lot harder. You'll probably start in a pretty sad place and have to ramp up.

Conclusion

The most reliable way to build better software is to integrate quality into process with as much automation as possible. Automated measurements and metrics provide continuous public visibility to the current state and trends. It is easiest to apply these techniques to new projects but they can also be applied to existing processes in an incremental fashion:

- Balance the value with the ease of automation. You may sometimes pick less than ideal metrics if they are easier to add

- Success breeds success. Do simple stuff first and get points on the board

- Incrementally improve and change. Add changes at the rate the team can absorb, often at a slightly faster rate than they would like to go.

Spending a Little More Up Front Saves Money

Everyone knows that defects cost more to fix when the later they are found. More people have to get involved as the code moves to upper environments and customer satisfaction or legal issues can appear if a defect makes it all the way to production.

Continuous integration and measurement adds cost at the beginning with a significant reduction in defects which actually shortens the total to-customer cycle time. Numbers from the original presentation deleted at company's request.

Continuous Improvement

Software development has a lot in common with other processes. Shorter cycles combine with honest measurement to provide opportunity for improvement and higher quality. Every cycle is an opportunity to do something better.

Many software projects only measure defect rates after it's too late to improve. Modern tools make it easy to track some quality measurements early in the process where changes can have a bigger downstream impact.

Automation Assisted Improvement

Quality processes involve many steps that must run over and over, often for years. Team members' interest in the process can fade over time as other "more pressing" problems must be handled. Automation is the best way to sustain a process over time.

This diagram shows the everyday cycle of software development as viewed purely from the development point of view. Peer/code reviews are a core part of the quality life cycle. Manual code inspection finds defects better than any other tool and acts as an excellent vehicle for staff training. I've worked on two large (1M LOC) projects where 100% peer review was required, and both saw a significant reduction in bad code and defects. The problem with peer reviews is that they vary in quality based on the reviewer and they are subject to management pressure when the team is behind.

Automated measurements work the same no matter what the schedule or the desires of individuals. The preceding diagram shows a system where automated static analysis and automated unit test analysis feed into a report that is then used for developer coaching. Agile-style continuous integration generates reports on a regular basis, often daily. This continuous visibility makes it easy for everyone to see the current state. People tend to pay more attention to quality when metrics reports are generated and made visible automatically.

Obvious Benefits

This sample project made the following changes in 15 months. The project already had continuous integration, via Hudson, and a code review process in place prior to introducing automated metrics (Java/Sonar).

- The code base increased 22% from 450,000 LOC to 540,000 LOC

- Unit test coverage increased from 27% to 67%.

- The total amount of untested code decreased by 33%, meaning that the team added tests for new code and for any existing code they modified.
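The relationship between these figures can be checked with a little arithmetic. The sketch below assumes coverage is a simple line-based percentage and uses the rounded LOC values quoted above, so its results only approximate the reported percentages, which were presumably computed from unrounded data. The function names are illustrative.

```python
def untested_loc(total_loc: int, coverage_pct: float) -> float:
    """Lines not exercised by unit tests, assuming line-based coverage."""
    return total_loc * (1 - coverage_pct / 100)


def pct_change(before: float, after: float) -> float:
    """Signed percentage change from before to after."""
    return (after - before) / before * 100


before_loc, after_loc = 450_000, 540_000  # rounded figures from the list above
before_cov, after_cov = 27.0, 67.0        # unit test coverage in percent

untested_before = untested_loc(before_loc, before_cov)
untested_after = untested_loc(after_loc, after_cov)

print(f"code base growth:       {pct_change(before_loc, after_loc):.1f}%")
print(f"untested LOC before:    {untested_before:,.0f}")
print(f"untested LOC after:     {untested_after:,.0f}")
print(f"change in untested LOC: {pct_change(untested_before, untested_after):.1f}%")
```

Untested code shrank in absolute terms even though the code base grew. That can only happen if tests were added for existing code as well as new code, or if untested code was removed.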

Automated Auditing

A fair amount of work has already been done on code quality and how it can be measured or audited. We went after four main areas because of the good tooling support.

- Complexity: For our purposes, this is essentially the number of code paths through a function or module. There is a lot of empirical data showing that complex code cannot be completely tested and that modules beyond a certain complexity are almost guaranteed to have defects.

- Compliance: This is the number of violations of programming rules as defined by the development teams. Java compliance tools like Checkstyle, PMD and FindBugs have hundreds of rules that range from standards-oriented to bad practice to guaranteed defects. The example project has 134 compliance rules.

- Coverage: Unit test code coverage represents the number of lines of code and the number of code branches covered by the unit tests. Coverage can't measure the quality or validity of the tests, but it does show which code executed during the unit test phase of continuous integration. The example project had an 80% code coverage target and increased the number of unit tests from 2,800 to 14,000 in 15 months.

- Comments: There are a lot of opinions on the usefulness of comments. Some teams argue that out-of-date documentation is worse than none at all. Others argue that their code is self-documenting and that comments are redundant. Our standard was that every method/function and class/file had to have parameter and API documentation, and that any code block that takes more than a few sentences to explain in a code review needs those same comments in the code.

- Modules with the highest complexity tend to have the most defects

- Complex modules can be impossible to fully test

- Unit testing drives down complexity

- This is a double win because you increase coverage while providing simpler code

- We had a single method with over 1 million possible paths through all the looping and conditional blocks. We could never have tested all of those paths.

- We also found that 20 problem modules in production were 12X more complex, contained 5X more rule violations and had 25% less code coverage than our average modules. This makes sense because simpler modules are easier to understand and have fewer problems. Restructuring code for testing often simplified it enough to reduce the complexity and make it possible for developers to understand what was going on.
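To make the complexity measure concrete, here is a minimal sketch that approximates cyclomatic complexity by counting decision points, using Python's standard `ast` module. The example project was Java and relied on Sonar for this measurement, so the Python code, the simplified counting rule, and the `classify` sample are illustrative assumptions only. Cyclomatic complexity counts decision points rather than paths; the second helper shows how quickly the path count itself grows.

```python
import ast

# Node types treated as decision points. This is a simplification of
# McCabe's cyclomatic complexity, not a full implementation.
_BRANCH_NODES = (ast.If, ast.For, ast.While, ast.ExceptHandler, ast.IfExp)


def cyclomatic_complexity(source: str) -> int:
    """Approximate cyclomatic complexity: 1 + number of decision points."""
    complexity = 1
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, _BRANCH_NODES):
            complexity += 1
        elif isinstance(node, ast.BoolOp):
            # 'a and b and c' adds two extra short-circuit branches
            complexity += len(node.values) - 1
    return complexity


def max_paths_sequential(independent_branches: int) -> int:
    """Upper bound on paths through N independent if-statements in sequence."""
    return 2 ** independent_branches


# Hypothetical function used only as input to the analysis; it is never run.
SAMPLE = '''
def classify(order):
    if order.total > 100 and order.customer.is_vip:
        discount = 0.2
    elif order.total > 100:
        discount = 0.1
    else:
        discount = 0.0
    for item in order.items:
        if item.backordered:
            return None
    return discount
'''

print(cyclomatic_complexity(SAMPLE))  # 6
print(max_paths_sequential(20))       # 1048576
```

Twenty independent two-way branches in a row are already enough to exceed a million paths, which is why a single large method can become untestable in practice.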

Automation is the simple part. We implemented a fairly straightforward process that built on standard Agile continuous integration processes.

- Non-automated code/peer review for every change. Small changes took 5 minutes or less, but large changes could take over an hour.

- Continuous integration builds ran all unit tests on every change made. Committers were notified if their changes in the build broke any unit tests.

- The continuous integration build runs a deployment check after all unit tests pass.

- Integration tests are scheduled to run twice a day.

- Scheduled builds invoke Sonar twice a day to generate metrics.

- Developers are immediately notified of compliance, complexity, coverage or comment problems.

- The entire team receives daily reports describing quality changes for the day along with a technical debt report. These reports are part of the scheduled build process.

- Defect reports are opened against any quality issues that stay unfixed beyond a certain amount of time.
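The notification and escalation steps in this list can be sketched as a simple threshold check. This is a minimal illustration, not the project's actual tooling: the class names, the thresholds other than the 80% coverage target, the 14-day grace period, and the sample data are all assumptions.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class ModuleMetrics:
    name: str
    coverage_pct: float
    complexity: int
    violations: int
    documented_api_pct: float


@dataclass
class QualityIssue:
    module: str
    description: str
    opened: date


# Only the 80% coverage target comes from the text; the rest are assumed.
MIN_COVERAGE_PCT = 80.0
MAX_COMPLEXITY = 10
MAX_VIOLATIONS = 0
MIN_DOCUMENTED_API_PCT = 100.0
MAX_ISSUE_AGE = timedelta(days=14)  # assumed grace period before a defect is filed


def check_module(m: ModuleMetrics, today: date) -> list[QualityIssue]:
    """Return one issue per metric that misses its threshold."""
    problems = []
    if m.coverage_pct < MIN_COVERAGE_PCT:
        problems.append(f"coverage {m.coverage_pct:.0f}% is below {MIN_COVERAGE_PCT:.0f}%")
    if m.complexity > MAX_COMPLEXITY:
        problems.append(f"complexity {m.complexity} exceeds {MAX_COMPLEXITY}")
    if m.violations > MAX_VIOLATIONS:
        problems.append(f"{m.violations} rule violations")
    if m.documented_api_pct < MIN_DOCUMENTED_API_PCT:
        problems.append(f"only {m.documented_api_pct:.0f}% of the API is documented")
    return [QualityIssue(m.name, p, today) for p in problems]


def issues_to_escalate(open_issues: list[QualityIssue], today: date) -> list[QualityIssue]:
    """Issues that stayed open longer than the grace period become defects."""
    return [i for i in open_issues if today - i.opened > MAX_ISSUE_AGE]


# Made-up data for illustration.
today = date(2024, 3, 15)
billing = ModuleMetrics("billing", coverage_pct=62.0, complexity=35,
                        violations=4, documented_api_pct=100.0)
new_issues = check_module(billing, today)
old_issue = QualityIssue("billing", "coverage below target", date(2024, 2, 1))

for issue in new_issues:
    print(f"notify developer: {issue.module}: {issue.description}")
for issue in issues_to_escalate([old_issue] + new_issues, today):
    print(f"open defect: {issue.module}: {issue.description}")
```

Keeping the thresholds in one place makes it easy to tighten them gradually as the team absorbs each change.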

Sonar and other reporting systems can be augmented to tie changes back to their source. VCS integration makes it possible to track changes by:

- Developer

- Team

- Project

- Branch

- Change ticket

Here is an example report that shows code coverage changes for Java files based on changes in Sonar data.
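A report along these lines could be derived by comparing two metric snapshots and attributing each changed file to its last committer. The sketch below is a minimal illustration in Python with made-up file names, developers, and coverage values. It does not reproduce the original report and does not use Sonar's API; the snapshot dictionaries stand in for whatever the metrics store and VCS actually provide.

```python
from collections import defaultdict


def coverage_deltas(previous: dict, current: dict) -> dict:
    """Per-file coverage change in percentage points; None marks a new file."""
    deltas = {}
    for path, now in current.items():
        before = previous.get(path)
        deltas[path] = None if before is None else round(now - before, 1)
    return deltas


def deltas_by_developer(deltas: dict, last_committer: dict) -> dict:
    """Group changed files by the developer who last committed them."""
    grouped = defaultdict(list)
    for path, delta in deltas.items():
        if delta is not None and delta != 0:
            grouped[last_committer.get(path, "unknown")].append((path, delta))
    return dict(grouped)


# Hypothetical snapshots: file path -> unit test coverage in percent.
yesterday = {"src/Billing.java": 64.0, "src/Invoice.java": 61.2, "src/Tax.java": 80.0}
today = {"src/Billing.java": 71.5, "src/Invoice.java": 58.0,
         "src/Tax.java": 80.0, "src/Refund.java": 90.0}
# Hypothetical VCS data: file path -> last committer.
committers = {"src/Billing.java": "alice", "src/Invoice.java": "bob",
              "src/Refund.java": "alice"}

deltas = coverage_deltas(yesterday, today)
for developer, changes in sorted(deltas_by_developer(deltas, committers).items()):
    for path, delta in changes:
        print(f"{developer:<8} {path:<20} {delta:+.1f}")
```

The same grouping key could be swapped for team, project, branch, or change ticket, depending on what the VCS integration exposes.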

Training and Expectations

Bringing the team and management up to speed is a lot harder. You'll probably start in a pretty sad place and have to ramp up.

- Continuous integration is initially used just to make sure the build works. There aren't many tests.

- There is no standard set of expectations. Create a code checklist. It will contain both coding standards and project standards based on the system architecture or tools set.

- Train everyone on continuous integration and standards. Some folks will have never seen any of the concepts before.

- Start mandatory peer/code reviews. All production code should be looked at by someone other than the developer. Be prepared, because all code will fail one or more code reviews. Be prepared to hear that there is no time to make the changes. How is it that they have time to fix the inevitable defects?

- Update the standards and review guidelines document based on the review results. There will be lots of updates in the early days.

- Start your continuous measurement builds for compliance and test coverage

- Train everyone on Test Driven Development, but expect to do Test Assisted Development in the beginning if it is an existing project.

- Facts are friendly. Generate reports and tell folks how they and the project are doing.

- Plan on rewards to help balance the frustration of forced change. It feels like more work in the beginning but eventually becomes second nature.

Conclusion

Automated tooling makes it possible for organizations to build better software with fewer manual steps. This is especially helpful for organizations in denial about the fact that they are software development shops, and/or companies without a natural engineering mindset. You can point out that liability and downtime are reduced by following "industry standard practices" or "following standard metrics and methods". It's never too late to start improving.

Tooling, including static and dynamic analysis tools, should carry weight when choosing a language or platform. It can make a very significant difference in the quality of the code and the long-term maintainability of applications. Basic automated tooling is not a panacea because it does not fully cover the problem space. Bad design and bogus tests written only for coverage often require peer review to catch. Requirements verification, security audits and end-to-end testing need their own tooling and metrics.
