Scaling Out Data Using In Memory Stores/Caches

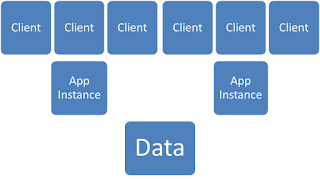

Standard App Topology

This diagram shows a basic web app with two app servers. It is a fairly straightforward architecture where the two app servers are used for load balancing and fault tolerance in case one server goes down. A single data server or cluster is sufficient. Most folks would probably actually have a web server tier between the clients and the App Instance tier but I'll skip it so some of the MS folks feel at home.

This diagram shows what happens when you start to increase the number of clients. One of the beauties of the commodity server architecture is that you can continue to add application server instances to handle the additional load. Data servers can be clustered, but there is a finite limit to the data tier's size and performance. You end up with an inverted pyramid where your application is teetering on top of a narrow data tier.

NoSQL databases accelerate applications in two ways. First, they increase the performance of the data tier by leaving the data in the application's native data model. Data, once put into the cache, can be used directly by the application instead of constantly joining multiple tables and marshaling the data as an RDBMS requires. This reduces the work done to create your data structures. Second, inexpensive large-memory machines are readily available, which makes scaling out the data tier much cheaper than buying the expensive hardware that high-end databases need. In-memory shared-nothing databases are designed to scale to Internet-type volumes and spikes, and they can add capacity relatively easily. The main benefits are:

- More Storage is available by aggregating RAM / Storage across nodes.

- Higher Read Throughput and I/O because RAM is faster than any Disk, including SSD

- Higher Write Throughput and I/O because RAM is faster than any Disk, including SSD

- Higher Transaction throughput by making use of more CPUs/Cores

- Lower Latency because more service nodes are available to service independent requests

- No data assembly overhead because it is already in the desired structure in the DB

NoSQL stores are amazingly fast at primary-key-based retrieval. Systems like Gemfire also support indexing of arbitrary attributes inside the object graph. This makes the system fast for both key-based retrieval and index-based searches. Partitioning and replication also help to improve performance over single machines or small cluster environments.
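To make the difference between key-based retrieval and an attribute index concrete, here is a minimal Python sketch. It is not Gemfire's API: the `IndexedRegion` class, its method names and the sample customer data are all made up for illustration.

```python
from collections import defaultdict


class IndexedRegion:
    """Toy in-memory region: primary-key lookup plus one attribute index.

    Hypothetical illustration only; not the API of Gemfire or any real product.
    """

    def __init__(self, indexed_attr):
        self.indexed_attr = indexed_attr
        self.entries = {}               # primary key -> stored object (a dict here)
        self.index = defaultdict(set)   # attribute value -> set of primary keys

    def put(self, key, value):
        # If the key is being overwritten, drop its old index entry first.
        old = self.entries.get(key)
        if old is not None:
            self.index[old[self.indexed_attr]].discard(key)
        self.entries[key] = value
        self.index[value[self.indexed_attr]].add(key)

    def get(self, key):
        # Primary-key retrieval: a single hash lookup.
        return self.entries.get(key)

    def find_by_attr(self, attr_value):
        # Index-based search: goes straight to the matching keys, no full scan.
        return [self.entries[k] for k in sorted(self.index.get(attr_value, ()))]


customers = IndexedRegion(indexed_attr="city")
customers.put("c1", {"name": "Ann", "city": "Denver"})
customers.put("c2", {"name": "Bob", "city": "Austin"})
customers.put("c3", {"name": "Cy", "city": "Denver"})
```

Both `get` and `find_by_attr` avoid scanning the whole data set, which is the property the paragraph above is describing. The stored objects stay in the shape the application uses.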

[Diagrams: Partitioning, Replication]

Scenarios

Session State

This is the most basic of all uses for in-memory data servers. High-volume web applications can use a high-performance backing store to hold session data. They save the session data to the cache on every page request, so application servers hold only in-flight session data at any given time. This reduces the server memory footprint, making system memory management easier. Extracting session data from the application nodes simplifies session failover and load balancing because user requests can be routed to any server with no loss of state. Most session caches keep redundant copies of all data, lessening the chance of user data loss.
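The session pattern can be sketched in a few lines of Python. This is an assumption-laden toy: `SessionStore` stands in for an external cache cluster and `AppServer` for an application instance; neither name comes from a real product.

```python
import copy


class SessionStore:
    """Stand-in for an external in-memory session cache (hypothetical)."""

    def __init__(self):
        self._sessions = {}

    def load(self, session_id):
        # Hand back a copy so the app server holds only in-flight state.
        return copy.deepcopy(self._sessions.get(session_id, {}))

    def save(self, session_id, data):
        self._sessions[session_id] = copy.deepcopy(data)


class AppServer:
    """Stateless app server: load the session, handle the request, save it back."""

    def __init__(self, name, store):
        self.name = name
        self.store = store

    def handle(self, session_id, item):
        session = self.store.load(session_id)
        session.setdefault("cart", []).append(item)
        session["last_server"] = self.name
        self.store.save(session_id, session)
        return session


store = SessionStore()
server_a = AppServer("A", store)
server_b = AppServer("B", store)

server_a.handle("s1", "book")
result = server_b.handle("s1", "pen")   # a different server picks up the same session
```

Because neither server keeps the session between requests, the load balancer is free to send the second request to server B without losing the cart.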

Data Accelerator

The in-memory data store sits between the application and database. Read operations first check the cache, which fetches the data from the underlying RDBMS on a cache miss. Data is saved to the database through the cache on a write-through or write-behind basis. This is really an in-memory cache more than a system-of-record data store. In-memory stores like Gemfire can be integrated into persistence frameworks like Hibernate, where they can act as an L2 cache. Some applications will use custom caching behavior. I worked on a system where we wrote custom code that treated IBM MQ calls as if MQ were a database. In our case, reads from MQ were cached and writes caused a cache flush.
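A minimal Python sketch of the read-through and write-through behavior described above follows. The `ReadThroughCache` class is hypothetical, and a plain dict stands in for the RDBMS.

```python
class ReadThroughCache:
    """Toy read-through / write-through cache (illustrative, not a real API)."""

    def __init__(self, backing_store):
        self.backing_store = backing_store   # a dict standing in for the RDBMS
        self.cache = {}
        self.misses = 0

    def get(self, key):
        if key in self.cache:
            return self.cache[key]           # cache hit: no database call
        self.misses += 1
        value = self.backing_store.get(key)  # cache miss: fetch from the database
        if value is not None:
            self.cache[key] = value
        return value

    def put(self, key, value):
        # Write-through: the database is updated before the call returns.
        self.backing_store[key] = value
        self.cache[key] = value


database = {"u1": "Ann"}
cache = ReadThroughCache(database)

first = cache.get("u1")    # miss, loaded from the database
second = cache.get("u1")   # hit, served from memory
cache.put("u2", "Bob")     # written to both the cache and the database
```

The application only ever talks to the cache; whether a read touched the database is invisible to it.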

Consistent Data Store

This is a little harder to describe. The idea is that Gemfire and other in-memory data stores are designed to synchronize their data across many redundant or replicated nodes. This means you can scale up the data store to hundreds of nodes rather than the 3-4 in a typical database. The system can also synchronize data operations to the database in either a write-through or write-behind fashion. Some large data shops use the memory store as their primary transaction database. They use the RDBMS for reporting or as a warm and fuzzy safety net that implements their traditional data management policies. Cluster replication to remote data centers is simpler and higher performing than database replication, so it can also be used to fill DR databases in other data centers.
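Write-behind differs from write-through in that the database update is queued and applied later. The following Python sketch shows the idea under simplifying assumptions: the `WriteBehindCache` class is hypothetical, a dict stands in for the RDBMS, and `flush` is called by hand where a real system would drain the queue in the background.

```python
from collections import deque


class WriteBehindCache:
    """Toy write-behind cache: writes land in memory first, the database later."""

    def __init__(self, backing_store):
        self.backing_store = backing_store   # a dict standing in for the RDBMS
        self.cache = {}
        self.pending = deque()               # ordered queue of unwritten updates

    def put(self, key, value):
        # The caller returns as soon as memory is updated.
        self.cache[key] = value
        self.pending.append((key, value))

    def get(self, key):
        return self.cache.get(key)

    def flush(self, max_items=None):
        # Drain queued updates to the database in arrival order.
        written = 0
        while self.pending and (max_items is None or written < max_items):
            key, value = self.pending.popleft()
            self.backing_store[key] = value
            written += 1
        return written


database = {}
reservations = WriteBehindCache(database)
reservations.put("r1", "seat 12A")
reservations.put("r2", "seat 3C")
```

Until `flush` runs, the database lags the memory store. That lag is one reason these systems keep redundant copies of the in-memory data.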

High Performance

High performance is attained through the use of redundant data nodes, through the partitioning of the data store and through the use of in-situ or "in place" processing. Data replication means the data exists on multiple nodes. The database can spread queries out across the nodes. Partitioning means the data is subdivided across multiple nodes. Queries can run in parallel across the nodes and then aggregate the results, or they can be isolated to individual nodes, accessing only a subset of the data, based on the partition keys. Both approaches provide huge boosts in performance, often almost linear with the number of nodes available. Most of the servers have the ability to run code inside the servers so that the processing is co-located with the data. This is better than stored procedures or something like Oracle's embedded Java because the code can operate on the data model's native level. Tools like Gemfire are written in Java and can execute Java code local to each node. Other systems often have similar concepts.
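Here is a rough Python sketch of partitioning plus in-place processing. Everything in it is an assumption made for illustration: the `Node` and `PartitionedStore` classes, the CRC32-modulo routing, and the use of threads to stand in for separate servers. In CPython the threads show the shape of a scatter-gather query, not a real speedup.

```python
import zlib
from concurrent.futures import ThreadPoolExecutor


class Node:
    """One data node holding a slice of the data."""

    def __init__(self, name):
        self.name = name
        self.data = {}

    def execute(self, func):
        # In-place processing: run the function next to the data
        # and return only the (small) result.
        return func(self.data)


class PartitionedStore:
    def __init__(self, nodes):
        self.nodes = nodes

    def _node_for(self, key):
        # Stable hash so a given key always routes to the same node.
        return self.nodes[zlib.crc32(key.encode("utf-8")) % len(self.nodes)]

    def put(self, key, value):
        self._node_for(key).data[key] = value

    def get(self, key):
        # Key-based access touches exactly one node.
        return self._node_for(key).data.get(key)

    def execute_on_all(self, func, combine):
        # Scatter the function to every node, then gather and combine.
        with ThreadPoolExecutor(max_workers=len(self.nodes)) as pool:
            partials = list(pool.map(lambda node: node.execute(func), self.nodes))
        return combine(partials)


orders = PartitionedStore([Node("A"), Node("B")])
for i in range(100):
    orders.put(f"order-{i}", {"amount": i})

total = orders.execute_on_all(
    func=lambda data: sum(v["amount"] for v in data.values()),
    combine=sum,
)
```

Each node sums only its own partition and ships back a single number, so the raw order data never crosses the network.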

Multi-Master & Disaster Recovery

High-speed in-memory data stores support WAN gateway and multi-cluster configurations. This makes it possible to bring data and applications closer to users by spinning up geographically dispersed data centers in an all-data-centers-live configuration. Data can be updated in any data center and is replicated to the others. The same feature can be used to replicate data to a Disaster Recovery site without any complex configuration or additional tooling. Tools like Gemfire support partial region/table-based replication so different clusters can have filtered views of the data.
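The following Python sketch illustrates multi-site replication with per-region filtering. It is a simplification and not how any particular product implements its gateways: the `Cluster` class is hypothetical, forwarding is synchronous where real gateways typically queue updates, and conflicting updates to the same key are not handled.

```python
class Cluster:
    """Toy cluster that forwards local updates to peer clusters by region."""

    def __init__(self, name):
        self.name = name
        self.regions = {}    # region name -> {key: value}
        self.gateways = []   # list of (peer cluster, set of region names to replicate)

    def connect(self, peer, regions):
        self.gateways.append((peer, set(regions)))

    def put(self, region, key, value, _from_gateway=False):
        self.regions.setdefault(region, {})[key] = value
        if _from_gateway:
            return   # replicated updates are not re-forwarded, which avoids loops
        for peer, allowed in self.gateways:
            if region in allowed:
                peer.put(region, key, value, _from_gateway=True)


east = Cluster("east")
west = Cluster("west")
dr = Cluster("dr")

# East and west are both live and replicate everything to each other.
east.connect(west, ["customers", "reservations"])
west.connect(east, ["customers", "reservations"])
# The DR site receives only a filtered view: reservations.
east.connect(dr, ["reservations"])
west.connect(dr, ["reservations"])

east.put("customers", "c1", "Ann")
west.put("reservations", "r1", "seat 12A")
```

After these two writes, both live clusters hold both entries, while the DR cluster holds only the reservation.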

Common Misconceptions

- Caches must be in-process with the application to provide any performance boost. Using local in-process caches provides the best performance but limits the size of the data and complicates system tuning and analysis. Using local on-box caches provides the second-best performance and is used by many teams, although teams that want tier isolation shy away from it. Standalone cache node clusters provide a lot of flexibility and simplify scale-out. Most large data implementations run standalone cache servers and get very high performance.

- All of the benefits come from being in-memory. The data stores support high-speed key-based retrieval of arbitrary and potentially complex data models. Overhead can be significantly reduced by removing the need to map between relational and application data models.

- Reliable Data Stores Require Complex and Expensive Infrastructure. In-memory data stores/caches can run on relatively inexpensive commodity hardware. Many of them can be distributed and run as part of the normal enterprise application deployment process. Systems like Gemfire are distributed as Java Jar files, making them "run anywhere" servers. Systems like Microsoft AppFabric are more heavyweight, requiring OS installs and configuration, which makes deployment and re-deployment significantly more complicated.

Quick Notes on Gemfire

VMware Gemfire, formerly Gemstone Gemfire, has these features:

- Java Based In-memory Data Store or cache

- It supports arbitrary data structures, object graphs and simple RDB-like flat data

- Wicked fast key based retrieval of arbitrarily shaped data

- Reliability through Data Replication and WAN gateway replication

- Performance scale-up through replication

- Data scale-up through Data Partitioning

- Multiple Regions or Tables in the same server, each of which can have different data policies.

- The ability to mix and match partition and replication strategies

Components and Topology

The standard components include:

- Locator: A process used by clients to find server nodes. Directory assistance for finding nodes that have the data or nodes that are available for service.

- Server: An actual data management process. Clusters store their data across multiple Server nodes. Servers can save data to an RDBMS in a write-through fashion or they can persist their data to a local disk. The latter is often used where too much data exists to stream to a single data store.

- Client: Consumers of the cache data. They can be straight clients or they can be caching clients, where the Gemfire client code is configured to retain data local to the client process for performance tuning.

- WAN Gateway: The feature or process that supports replication of data across data centers or clusters. This also provides the hooks for database write-behind, which is essentially data replication to a database instead of to another cluster.

- JMX Agent: A special process that makes cluster data available to outside monitoring tools through the Java JMX interface.

The following diagram shows a two cluster Gemfire configuration where each cluster has two servers. Each cluster has two regions, one partitioned across the servers and one replicated across the servers. The partitioned data might be something like trip reservations while the replicated data might be the customer information that essentially joins to the reservations. The two clusters are replicated across the WAN gateway which is also doing write-behind to the local RDBMS.

Simple Example

Consider a two-node cluster, Node A and Node B. There is customer data and flight information about the scheduled flights. Both customer and flight information exist on Node A and Node B. There is a third data set, which is a list of reservations and seat assignments. This is essentially the join of customer and flight data that represents a reservation. There are a lot of reservations because the flights occur on a daily basis, so we partitioned the reservation / seat information so that half is on Node A and half is on Node B. Key-based retrieval is of course always possible, but this schema also makes it possible to do very fast queries or retrieval based on joins between the three tables. Partitioning means that the two nodes can each search half of the reservation data simultaneously. The picture doesn't show the locators required for cluster function.
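The layout in this example can be sketched in Python as follows. The sample customers, flights and seat numbers are invented, the `ReservationNode` class is hypothetical, and the modulo routing on a numeric reservation id is just one simple way to split the data in half. The nodes are queried in a plain loop here; in a real cluster each node would search its partition at the same time.

```python
# Replicated data: every node holds a full copy.
customers = {"c1": {"name": "Ann"}, "c2": {"name": "Bob"}}
flights = {"f100": {"route": "DEN-AUS"}, "f200": {"route": "AUS-JFK"}}


class ReservationNode:
    def __init__(self, name):
        self.name = name
        self.customers = dict(customers)   # replicated region
        self.flights = dict(flights)       # replicated region
        self.reservations = {}             # partitioned region: this node's share only

    def passengers_on(self, flight_id):
        # Local join: reservation -> customer, using only data on this node.
        return [
            (self.customers[r["customer"]]["name"], r["seat"])
            for r in self.reservations.values()
            if r["flight"] == flight_id
        ]


nodes = [ReservationNode("A"), ReservationNode("B")]


def add_reservation(res_id, customer, flight, seat):
    # Route each reservation to one node based on its id.
    node = nodes[res_id % len(nodes)]
    node.reservations[res_id] = {"customer": customer, "flight": flight, "seat": seat}


def passengers_on(flight_id):
    # Ask every node to search its own partition, then merge the results.
    results = []
    for node in nodes:
        results.extend(node.passengers_on(flight_id))
    return sorted(results)


add_reservation(1, "c1", "f100", "12A")
add_reservation(2, "c2", "f100", "12B")
add_reservation(3, "c1", "f200", "3C")

manifest = passengers_on("f100")
```

Because customers and flights are replicated, each node can resolve the join for its own reservations without calling the other node.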

I am actually looking at this particular pattern now in a distributed solution.

I'm going to be using a bus based solution for a high availability message transform solution.

In my case I have a couple of WCF endpoints which I can't change. I then use NServiceBus with RabbitMQ as my transport and Redis as my in-memory working dataset processing store.

After initially receiving a message I parse the message and persist it to disk via a command.

Additionally I have orthogonal processing like audits etc., dispatching a keystone message with the parsed header data and a routing slip via an ICommand to my NServiceBus.

I have a service which takes the original JSON data structure and pushes it into a distributed Redis in-memory store. Using the routing slip pattern or a durable saga, you can then retain direct access to your in-memory data store. I use this as my working set for message transformations based on my routing slip activities. There are several really good C# libraries for accessing Redis.

Workflow is captured and events are dispatched based on the routing slips event model.

I figure the 0.3 ms latency expected in most enterprise LANs is much better than the network-to-disk I/O of any persistent database.

If I lose integrity of my transformation, I can either replay or start from where I left off.

Ideally you want to keep your working data set small. But in my case it's fairly large, sometimes up to 2 MB. I will be changing the structure of our inbound messages, hopefully soon. This solution will give us the flexibility of more throughput and greater stability under load.

Great article Joe.
