Behavior Driven Acceptance Criteria for Features, User Stories and Tests

Behavior Driven Development (BDD) is an evolutionary outcome of Test Driven Development (TDD) and Acceptance Test Driven Development (ATDD). BDD focuses on business value and is designed to remind everyone that business outcomes are the top priority.

Features and User Stories also have technical constraints, dependencies, and Non-Functional Requirements. These are not part of the Behavior Driven Acceptance Criteria.

- Drive repeated iteration around the desired business outcomes. Projects can often get distracted and drift away from the desired functions.

- Think “from the outside in”. Focus on the behavior that directly targets the desired business outcomes. Do not start inside a system or with a discussion on how tools can be used.

- Use a standard notation for describing behaviors and acceptance criteria. Select a form that can be understood by Subject Matter Experts and delivery. Some teams pick a syntax that is friendly to automated test tools.

- Apply these techniques at the top, working down into the different software layers.

This section includes concepts promoted by the Agile Alliance.

Related Links

Mine

- Video: Behavior Driven Acceptance Criteria for Features, Stories, and Tests

- Video: Explore Behavior Driven Testing with public websites

- Blog: Acceptance Criteria are the true Definition of Done

- Blog: Behavior Driven Acceptance Criteria for Features, User Stories and Tests

Other

- https://www.agilealliance.org/glossary/bdd

- https://en.wikipedia.org/wiki/Five_whys

Backlog Refinement

This discussion assumes you are following some type of agile refinement process. You start with high-level business functionality that you expect to deliver in one or more Program Increments. My practice has been to create Backlog Features that each represent a single Program Increment. Those Features are then broken down into User Stories that delivery teams size to be delivered in a single iteration. That iteration is called a Sprint if you follow Scrum practices.

Acceptance Criteria: Features and User Stories

Features contain a business value statement, a list of requirements, dependency linkages, enablers, and a set of Acceptance Criteria. Those Acceptance Criteria determine the business behavior that needs to be completed to meet the Definition of Done (DoD). At this level, the behavior should be specified independently of any underpinning technology.

Acceptance Criteria refinement is one of the biggest challenges when pushing a Feature into a Ready state so it can be picked up and worked. It is where the refinement team reaches agreement on the scope of this Feature in this Program Increment.

Feature-level Acceptance Criteria are decomposed as the Feature is broken down into iteration-level User Stories. Delivery teams work with business analysts to understand the expected behavior and business outcomes. They create inward-facing test suites that are derived entirely from the User Story-level Acceptance Criteria.

There are essentially two ways to specify Acceptance Criteria. They can be a detailed specification that provides the exact steps, or they can be a behavior-based definition. Detailed specifications can be complicated to maintain in the documentation and can confuse implementation with function. Behavior-based Acceptance Criteria describe the business process, leaving it up to delivery to figure out how that maps to the actual test interaction.

Features and User Stories should use the same BDD Domain Specific Language (DSL). This lets the business keep the focus on business value. Product and tech are both involved in Feature refinement and User Story definition, and both can use the same language.

Acceptance Criteria: Mapping to Test Cases

User Story Acceptance Criteria map directly to the test cases. Sometimes additional Acceptance Criteria are generated as delivery explores the story and identifies gaps or misses. TDD and ATDD often take an implementation view at this point. BDD keeps using the same specification language that was used for Features and User Stories.

- Product Owners and the business contribute the examples that describe the business outcomes, always from the point of view of the desired outcome and not how that outcome is implemented.

- It is the delivery team's job to automate tests that can convert those business objectives into the actual technical steps required for the test.

Acceptance Criteria: Testing Patterns

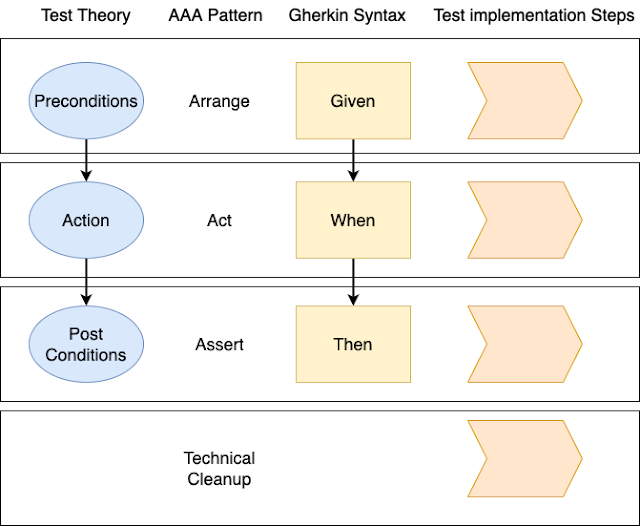

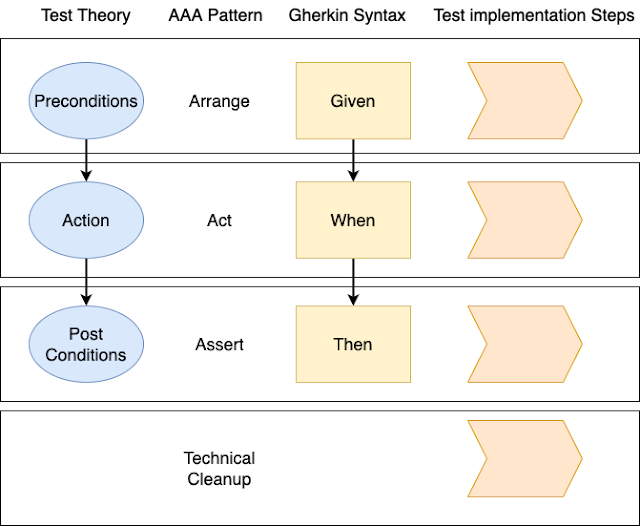

BDD Domain Specific Languages (DSLs) tend to align with well-known testing patterns. Those patterns work in many situations outside software development.

Test and example specifications generally contain three phases. The precondition, or expectation, phase (Given) describes any pre-test state. The action phase (When) is where business functionality is invoked or applied. The analysis phase (Then) verifies the desired outcome. Each high-level Given, When, or Then phase can contain an arbitrary number of work steps.
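
The three phases can be sketched in an ordinary test function. This is a minimal sketch, not a prescribed structure: `FAKE_INDEX` and `search` are hypothetical stand-ins for a real system under test, and the comments mark where Given, When, and Then fall.

```python
# Hypothetical in-memory index standing in for a real search system.
FAKE_INDEX = {
    "microsoft": [
        "https://www.microsoft.com/",
        "https://en.wikipedia.org/wiki/Microsoft",
    ],
}


def search(index, term):
    """Return the links stored for a term, or an empty list if none exist."""
    return index.get(term.lower(), [])


def test_search_includes_trademark_site():
    # Given: the precondition phase sets up the pre-test state
    index = FAKE_INDEX

    # When: the action phase invokes the business functionality
    results = search(index, "Microsoft")

    # Then: the analysis phase verifies the desired outcome
    matches = [link for link in results if "microsoft.com" in link]
    assert len(matches) >= 1
    return matches
```

Each phase here is a single statement, but any of them could grow into several work steps without changing the overall shape.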

Software systems exist that can read Gherkin syntax and turn it into tests that are executable by software testing tools. SpecFlow and Cucumber are two examples. Business outcomes are defined with Given/When/Then in a human-readable form. Those specifications are converted into Given/When/Then function stubs. Developers then fill in the stubs to do the actual detailed work. The stub bodies might be code calls for software modules, API invocations for service testing, or web calls for web user interface testing.
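
To make the stub idea concrete, here is a rough sketch of what such a conversion could look like. It is not how SpecFlow or Cucumber are implemented; `generate_stubs` and the generated function names are assumptions made up for illustration, and `And` steps are ignored to keep it short.

```python
import re


def generate_stubs(feature_text):
    """Return Python source with one stub per unique Given/When/Then step."""
    seen = set()
    stubs = []
    for line in feature_text.splitlines():
        match = re.match(r"\s*(Given|When|Then)\s+(.*\S)", line)
        if not match:
            continue  # skip scenario titles and blank lines
        keyword, phrase = match.groups()
        if (keyword, phrase) in seen:
            continue  # identical steps share one stub
        seen.add((keyword, phrase))
        # Build a function name such as "given_i_search_the_internet"
        name = re.sub(r"\W+", "_", f"{keyword} {phrase}".lower()).strip("_")
        stubs.append(
            f"def {name}(context):\n"
            f"    # {keyword} {phrase}\n"
            f"    raise NotImplementedError\n"
        )
    return "\n".join(stubs)


FEATURE = """
Scenario: Trademark owner sites should have priority with internet searches
Given I search the internet
When I use a term
Then Results should include the trademark holder's site
"""

print(generate_stubs(FEATURE))
```

The output is three empty functions, one per step. Filling in their bodies is the developer work described above.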

Acceptance Criteria in Gherkin Syntax

BDD is also sometimes known as Specification by Example.

Gherkin syntax is a specification Domain Specific Language that works at different levels of granularity, for both Features and User Stories. A Gherkin specification feels more like a set of example behaviors than an abstract free-form requirement definition. Gherkin follows the Given/When/Then syntax described above.

Feature

BDD definitions captured at the Feature level should represent business outcomes at the value stream level. Ex: Trademark holders should be given priority when people ask about the trademark.

Scenario: Trademark owner sites should have priority with internet searches

Given I search the internet

When I use a term

Then Results should include the trademark holder's site

Notice that this specification is implementation agnostic. It can define a user interface behavior, an API, or some type of legal process.

User Story

BDD definitions captured at the User Story level describe the desired business outcome for this increment's deliverables. Acceptance Criteria at this level are tightly bound test scenarios but should still be described in terms of the desired business outcome. Ex: Trademark holders often own a domain name that contains the trademark.

Scenario: Search engine results include links in domain with that name if it exists.

Given I search the internet with a search engine

When I search with a term

Then There should be at least 1 links with the search term in the domain
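
The Then step above stays in business terms, and delivery decides how to check it. One possible check is sketched below using the standard library's `urllib.parse`. The function name `links_with_term_in_domain` and the sample links are assumptions for illustration only.

```python
from urllib.parse import urlparse


def links_with_term_in_domain(links, term):
    """Return the links whose host name contains the search term."""
    term = term.lower()
    # hostname is None for strings that are not absolute URLs
    return [link for link in links if term in (urlparse(link).hostname or "")]


sample_links = [
    "https://www.microsoft.com/en-us",
    "https://example.org/microsoft-news",  # term is in the path, not the domain
]
matches = links_with_term_in_domain(sample_links, "microsoft")
assert len(matches) >= 1  # "at least 1 links with the search term in the domain"
```

Only the first sample link counts, because the second carries the term in its path rather than in its domain.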

Behavior-Driven Test Definitions

Test scenarios should use the same phrasing when describing identical functionality. This reduces the cognitive load of working out whether a phrasing difference implies a behavioral difference. The two tests below are similar and share exactly the same Gherkin step phrasing. Only the quoted values differ.

Scenario: Search with webcrawler returns results in domain

Given I search the internet using site "webcrawler"

When I use the term "microsoft"

Then There should be at least 1 links with the "microsoft.com" in them

Scenario: Search with altavista returns results in domain

Given I search the internet using site "altavista"

When I use the term "apple"

Then There should be at least 1 links with the "apple.com" in them

Cucumber and SpecFlow test automation reuses step definitions when steps have the same phrasing. This means the two tests above use the exact same steps, and thus the second test requires no additional software coding.
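
The reuse can be illustrated with a toy step registry. This is a simplified sketch and not the Cucumber or SpecFlow API: the `step` decorator, `run_scenario`, and the canned results are all hypothetical, and a real step would call the search site instead of a lookup table.

```python
import re

STEP_DEFINITIONS = []  # (compiled pattern, function) pairs shared by all scenarios


def step(pattern):
    """Register a function as the implementation of a step phrase."""
    def register(func):
        STEP_DEFINITIONS.append((re.compile(pattern), func))
        return func
    return register


# Hypothetical canned results keyed by (site, term).
CANNED_RESULTS = {
    ("webcrawler", "microsoft"): ["https://www.microsoft.com/", "https://example.org/ms"],
    ("altavista", "apple"): ["https://www.apple.com/"],
}


@step(r'I search the internet using site "([^"]+)"')
def choose_site(context, site):
    context["site"] = site


@step(r'I use the term "([^"]+)"')
def run_search(context, term):
    context["links"] = CANNED_RESULTS.get((context["site"], term), [])


@step(r'There should be at least (\d+) links with the "([^"]+)" in them')
def check_links(context, minimum, domain):
    hits = [link for link in context["links"] if domain in link]
    assert len(hits) >= int(minimum), f"expected {minimum} links containing {domain}"


def run_scenario(lines):
    """Match each step line against the registry and call the matching function."""
    context = {}
    for line in lines:
        _keyword, phrase = line.split(" ", 1)  # drop Given/When/Then
        for pattern, func in STEP_DEFINITIONS:
            match = pattern.fullmatch(phrase)
            if match:
                func(context, *match.groups())
                break
        else:
            raise LookupError(f"no step matches: {phrase}")
    return context


first = run_scenario([
    'Given I search the internet using site "webcrawler"',
    'When I use the term "microsoft"',
    'Then There should be at least 1 links with the "microsoft.com" in them',
])
second = run_scenario([
    'Given I search the internet using site "altavista"',
    'When I use the term "apple"',
    'Then There should be at least 1 links with the "apple.com" in them',
])
```

Both scenarios run against the same three registered functions. Only the quoted parameters change, which is why the second scenario needs no new step code.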

Implementation of highly technical Behavior-like Test Definitions

The test definition below reads like it was written by someone who knows the implementation details. Behavior definitions should be implementation agnostic. This puts us in a situation where the same behavior definition may be used for different purposes or levels of testing. Automated tests must know all the details and will implement everything called out here. In a behavior-based definition, those details are hidden inside the steps.

Scenario: Search with Bing returns results in domain

Given I want to search for something on the internet

When I open Chrome

And I type "www.bing.com" into the url bar and hit enter

And I click on the search box

And I enter "facebook" into the search field

And I click on the search button

Then The browser HTTP code should be a 200

And The returned page should have at least 1 href "facebook.com" in them

And "facebook" should be in the title bar of the results page
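
One way to keep the scenario text at the behavior level is to move those clicks and keystrokes into the step implementation. The sketch below assumes a hypothetical `FakeBrowser` that only records actions. A real suite would drive an actual browser or API client instead.

```python
class FakeBrowser:
    """Hypothetical stand-in for a browser driver; it only records actions."""

    def __init__(self):
        self.actions = []

    def open(self, url):
        self.actions.append(f"open {url}")

    def click(self, element):
        self.actions.append(f"click {element}")

    def type_into(self, element, text):
        self.actions.append(f"type '{text}' into {element}")


def when_i_use_the_term(browser, site_url, term):
    """Behavior-level step: When I use the term "<term>"."""
    # The technical details live here, not in the scenario text.
    browser.open(site_url)
    browser.click("search box")
    browser.type_into("search field", term)
    browser.click("search button")


browser = FakeBrowser()
when_i_use_the_term(browser, "https://www.bing.com", "facebook")
print(browser.actions)
```

The scenario then needs a single When line, and the same line could be backed by an API call in a different test layer.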

Created 2022 07
