Using OpenTelemetry to send Python metrics and traces to Azure Monitor and App Insights

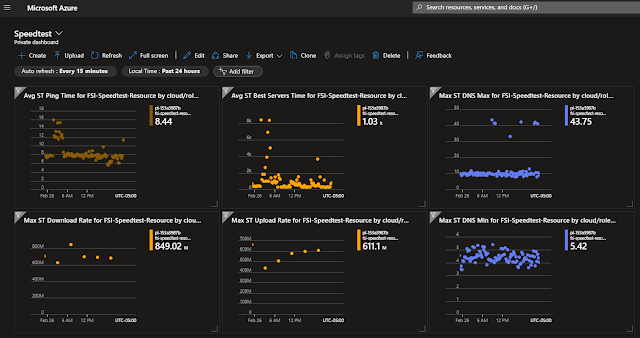

Microsoft has updated the Python libraries that send metrics and traces to Application Insights, covering both third-party library invocations and application-specific custom telemetry. Its OpenTelemetry exporters make it simple to route observations from the standard Python OpenTelemetry library to Application Insights. You bootstrap the Azure configuration and credentials, then use standard OpenTelemetry calls to capture metric and trace information.

SpeedTest network Metrics in Azure using OpenTelemetry

A couple of years ago I wrote a program to record the health of my home internet connection and store that information in Azure Application Insights. I did this with a Python program that used Microsoft's OpenCensus Azure exporter. That library is becoming obsolete with the move from OpenCensus to OpenTelemetry.

That project has now been ported to OpenTelemetry! It took 6 hours and 40 lines of code, preceded by 20 hours of whining and internet surfing. The ...
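As a rough illustration of the "bootstrap Azure, then use standard OpenTelemetry calls" pattern described in the introduction, here is a minimal sketch. It is not the code from the ported project. It assumes the `azure-monitor-opentelemetry-exporter` and `opentelemetry-sdk` packages are installed and that the connection string lives in the `APPLICATIONINSIGHTS_CONNECTION_STRING` environment variable. The helper names, the `speedtest` meter and tracer names, the `download_mbps` instrument, and the sample value are all hypothetical.

```python
import os


def get_connection_string(env=None):
    """Read the Application Insights connection string from the environment."""
    env = os.environ if env is None else env
    conn = env.get("APPLICATIONINSIGHTS_CONNECTION_STRING")
    if not conn:
        raise RuntimeError("APPLICATIONINSIGHTS_CONNECTION_STRING is not set")
    return conn


def configure_metrics(connection_string, export_interval_millis=60_000):
    """Azure-specific bootstrap: send OpenTelemetry metrics to Azure Monitor."""
    # Imported here so the module still loads where the packages are missing.
    from azure.monitor.opentelemetry.exporter import AzureMonitorMetricExporter
    from opentelemetry import metrics
    from opentelemetry.sdk.metrics import MeterProvider
    from opentelemetry.sdk.metrics.export import PeriodicExportingMetricReader

    exporter = AzureMonitorMetricExporter(connection_string=connection_string)
    reader = PeriodicExportingMetricReader(
        exporter, export_interval_millis=export_interval_millis
    )
    metrics.set_meter_provider(MeterProvider(metric_readers=[reader]))
    return metrics.get_meter("speedtest")  # hypothetical meter name


def configure_tracing(connection_string):
    """Azure-specific bootstrap: send OpenTelemetry spans to Azure Monitor."""
    from azure.monitor.opentelemetry.exporter import AzureMonitorTraceExporter
    from opentelemetry import trace
    from opentelemetry.sdk.trace import TracerProvider
    from opentelemetry.sdk.trace.export import BatchSpanProcessor

    provider = TracerProvider()
    provider.add_span_processor(
        BatchSpanProcessor(
            AzureMonitorTraceExporter(connection_string=connection_string)
        )
    )
    trace.set_tracer_provider(provider)
    return trace.get_tracer("speedtest")  # hypothetical tracer name


def main():
    conn = get_connection_string()
    meter = configure_metrics(conn)
    tracer = configure_tracing(conn)

    # From here on, only standard OpenTelemetry calls are used.
    download = meter.create_histogram(
        "download_mbps", unit="Mbit/s", description="Measured download speed"
    )
    with tracer.start_as_current_span("speedtest-run"):
        # Placeholder value; a real run would record a measured result.
        download.record(93.4, {"server": "example"})
```

Calling `main()` with the environment variable set would export one span and one histogram observation. Everything after the two `configure_*` calls is vendor-neutral, so the same recording code could target a different backend by swapping the exporters.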