Running the Microsoft ALM TFS 2013 (Hyper-V) Virtual Machine in VMware without Persisting Changes

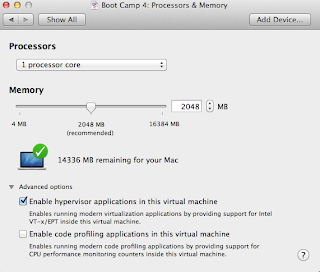

This post describes how to run the Microsoft TFS/ALM Hyper-V virtual machine in one of the VMware hypervisors (Player/Workstation/Fusion). You can apply the same concepts to any other virtual machine or appliance.

The 2013 RTM ALM image comes with a set of TFS and Visual Studio labs. The virtual machine and the labs have a few expectations:

- The image ships as a Hyper-V VHD running an evaluation copy of Windows Server 2012.
- The work items and other data are "point in time" information. Sprint, iteration, and other date-dependent data can be used in the labs only if the VM's clock is set to specific dates.
- The machine resets its clock to the same specific date and time on every reboot. This lets you re-run the exercises.

If you want to use this process for virtual machines anchored at dates and times other than those of the TFS 2013 RTM ALM image used in this post, pay attention to the places where the time is set.

The labs work bes...