Data Lakes are not just for squares

Columnar-only lakes are just another warehouse

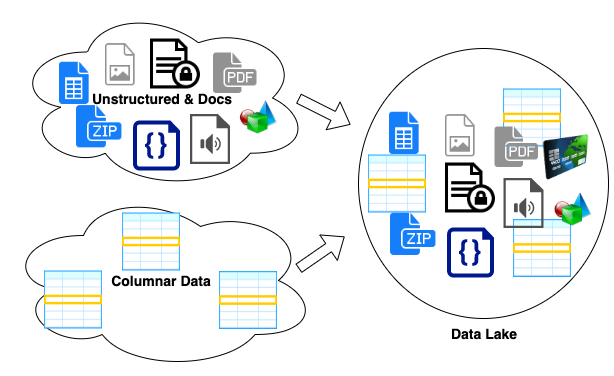

Data Lakes are intended to be a source of truth for all of an organization's varied data. Some organizations restrict their lake to columnar data, violating one of the main precepts behind Data Lakes. They limit the data lake to large data set transformations or automated analytics. This narrow definition leaves those companies with nowhere to store a significant subset of their total data pool.

Data Lakes are not restricted

Some enterprise data lakes make the data more usable by storing the same data in multiple formats: the original format and a more queryable, accessible format. This approach preserves the original data exactly while making it more accessible.

Examples of multiple-copy, same-data storage include:

- CSV and other data that is also stored in directly queryable formats like Parquet.

- Raw data sets that are also combined into a single partitioned data set to simplify queries.

- Compressed formats or archives that are also stored in uncompressed formats.

- Encrypted data that is also stored in unencrypted format after being scrubbed or tokenized.

- Images that are stored in both native and OCR extracted text formats.

- Documents that may be converted to denormalized queryable formats.
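As a minimal sketch of the dual-copy idea, the snippet below writes the original CSV bytes untouched and also writes a derived copy that is easier to query. The function name `store_with_derived_copy`, the `raw/` and `derived/` folder layout, and the use of JSON Lines as a dependency-free stand-in for a format like Parquet are all assumptions made for illustration.

```python
import csv
import hashlib
import io
import json
import tempfile
from pathlib import Path


def store_with_derived_copy(raw_csv: bytes, lake_root: Path, name: str) -> dict:
    """Store the untouched original plus a derived, easier-to-query copy."""
    # Hypothetical layout: originals under raw/, derived copies under derived/.
    raw_path = lake_root / "raw" / f"{name}.csv"
    derived_path = lake_root / "derived" / f"{name}.jsonl"
    raw_path.parent.mkdir(parents=True, exist_ok=True)
    derived_path.parent.mkdir(parents=True, exist_ok=True)

    # The original bytes are written unchanged so the source is preserved exactly.
    raw_path.write_bytes(raw_csv)

    # Derived copy: one JSON object per CSV row (stand-in for Parquet here).
    reader = csv.DictReader(io.StringIO(raw_csv.decode("utf-8")))
    row_count = 0
    with derived_path.open("w", encoding="utf-8") as out:
        for row in reader:
            out.write(json.dumps(row) + "\n")
            row_count += 1

    # The checksum lets a later reader confirm the original was never altered.
    return {
        "raw_path": str(raw_path),
        "derived_path": str(derived_path),
        "raw_sha256": hashlib.sha256(raw_csv).hexdigest(),
        "rows": row_count,
    }


sample = b"id,city\n1,Austin\n2,Boston\n"
with tempfile.TemporaryDirectory() as tmp:
    info = store_with_derived_copy(sample, Path(tmp), "customers")
    raw_round_trip = Path(info["raw_path"]).read_bytes()
    derived_text = Path(info["derived_path"]).read_text(encoding="utf-8")
    derived_rows = [json.loads(line) for line in derived_text.splitlines()]
```

Recording a checksum of the original alongside the derived copy is one way to show that the queryable version came from an unmodified source.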

The Catalog is the Linchpin

Well-cataloged data lakes give companies a way to get access to all their data. Data lakes are only useful if they have a good, searchable catalog that includes accurate, high-fidelity metadata. Good catalog info includes:

- Data lineage: source systems and pointers to any transformations.

- Data ownership

- Format version numbers

- Metadata tags that make it easier to locate the data set or tie it to other data and systems.

- Schema definitions that describe available attributes

A failure here results in a Data Lake that is nothing more than a data dumping ground.
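The catalog fields listed above can be sketched as a small record type with two lookup helpers. This is only an illustration: the `CatalogEntry` class, its field names, the helper functions, and the sample data sets are hypothetical and do not describe any particular catalog product.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class CatalogEntry:
    """One data set in the lake, described by its catalog metadata."""
    name: str
    owner: str                      # data ownership
    data_format: str
    format_version: str             # format version number
    source_system: str              # lineage: originating system
    derived_from: List[str] = field(default_factory=list)     # lineage: upstream data sets
    transformations: List[str] = field(default_factory=list)  # lineage: pointers to transformations
    tags: Set[str] = field(default_factory=set)                # searchable metadata tags
    schema: Dict[str, str] = field(default_factory=dict)       # attribute name -> type


def find_by_tag(catalog: List[CatalogEntry], tag: str) -> List[str]:
    """Return the names of data sets carrying the given tag."""
    return [entry.name for entry in catalog if tag in entry.tags]


def find_by_attribute(catalog: List[CatalogEntry], attribute: str) -> List[str]:
    """Return the names of data sets whose schema exposes the attribute."""
    return [entry.name for entry in catalog if attribute in entry.schema]


catalog = [
    CatalogEntry(
        name="raw/orders.csv",
        owner="sales-ops",
        data_format="csv",
        format_version="1",
        source_system="order-entry",
        tags={"orders", "raw"},
        schema={"order_id": "string", "amount": "string"},
    ),
    CatalogEntry(
        name="derived/orders.parquet",
        owner="data-platform",
        data_format="parquet",
        format_version="2",
        source_system="order-entry",
        derived_from=["raw/orders.csv"],
        transformations=["csv_to_parquet"],
        tags={"orders", "queryable"},
        schema={"order_id": "string", "amount": "decimal"},
    ),
]

queryable = find_by_tag(catalog, "queryable")
with_amount = find_by_attribute(catalog, "amount")
```

Keeping `derived_from` on every derived copy is what lets a reader walk from a queryable data set back to the original it was built from.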

GDPR, CCPA and the downside of duplicate data

Traditional legal data retention policies and new privacy rules combine to make data management more complicated. Many data sets must therefore support upsert-type operations that transparently remove rows or columns from the data. Data scrubbing must be supported at both the storage level and the data set organization level. It is hard to retain the integrity of data entities and attributes when they are stored in multiple places.
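To illustrate why duplicate copies complicate erasure, here is a minimal in-memory sketch that tracks every copy of a logical entity and removes one subject's rows from all of them. The `CopyRegistry` class, its methods, and the sample data set names are hypothetical. A real lake would rewrite files or rely on a table format that supports deletes, which this sketch does not attempt.

```python
from typing import Dict, List, Tuple

Row = Dict[str, str]


class CopyRegistry:
    """Tracks every physical copy of a logical entity so erasure reaches all of them."""

    def __init__(self) -> None:
        # logical entity -> list of (data set name, rows, key column)
        self._copies: Dict[str, List[Tuple[str, List[Row], str]]] = {}

    def register(self, entity: str, dataset_name: str,
                 rows: List[Row], key_column: str) -> None:
        """Record one more copy of the entity and the column holding the subject key."""
        self._copies.setdefault(entity, []).append((dataset_name, rows, key_column))

    def erase_subject(self, entity: str, subject_id: str) -> Dict[str, int]:
        """Remove the subject's rows from every registered copy; return rows removed per copy."""
        removed: Dict[str, int] = {}
        for dataset_name, rows, key_column in self._copies.get(entity, []):
            before = len(rows)
            # Rewrite the copy in place without the subject's rows.
            rows[:] = [r for r in rows if r.get(key_column) != subject_id]
            removed[dataset_name] = before - len(rows)
        return removed


# Two copies of the same customers, with different key column names.
raw_rows = [{"customer_id": "c1", "city": "Austin"},
            {"customer_id": "c2", "city": "Boston"}]
derived_rows = [{"cust_id": "c1", "city": "Austin"},
                {"cust_id": "c2", "city": "Boston"}]

registry = CopyRegistry()
registry.register("customer", "raw/customers.csv", raw_rows, "customer_id")
registry.register("customer", "derived/customers.parquet", derived_rows, "cust_id")

removed = registry.erase_subject("customer", "c1")
```

The point of the sketch is that any copy missing from the registry would silently keep the subject's data, which is the integrity risk of storing the same entity in multiple places.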

You're broken when...

You do not have a holistic data warehouse strategy when people think that only row/column data goes in the lake, or that all documents should be stored as a blob field in a single row/column data set.
